Zeyu Wang, Yao-Hui Li, 01 May 2025

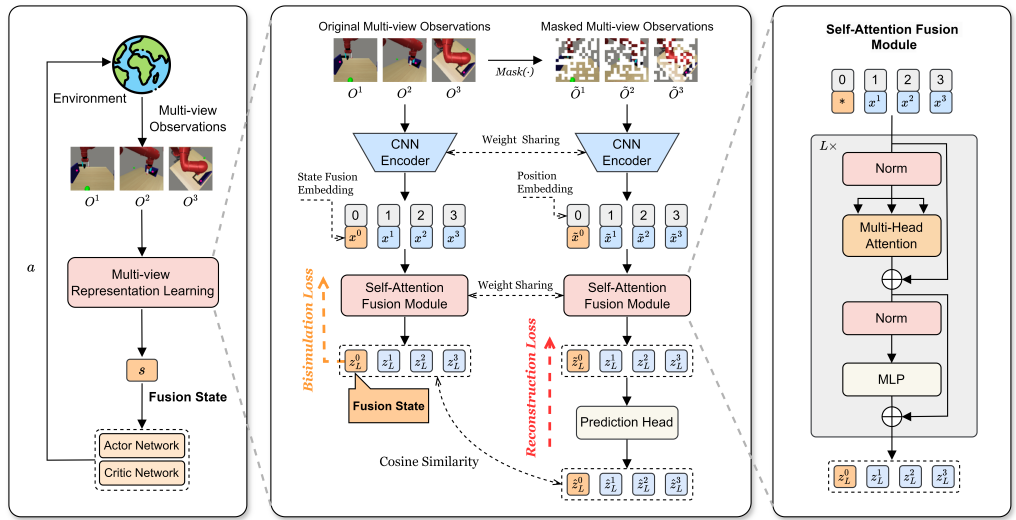

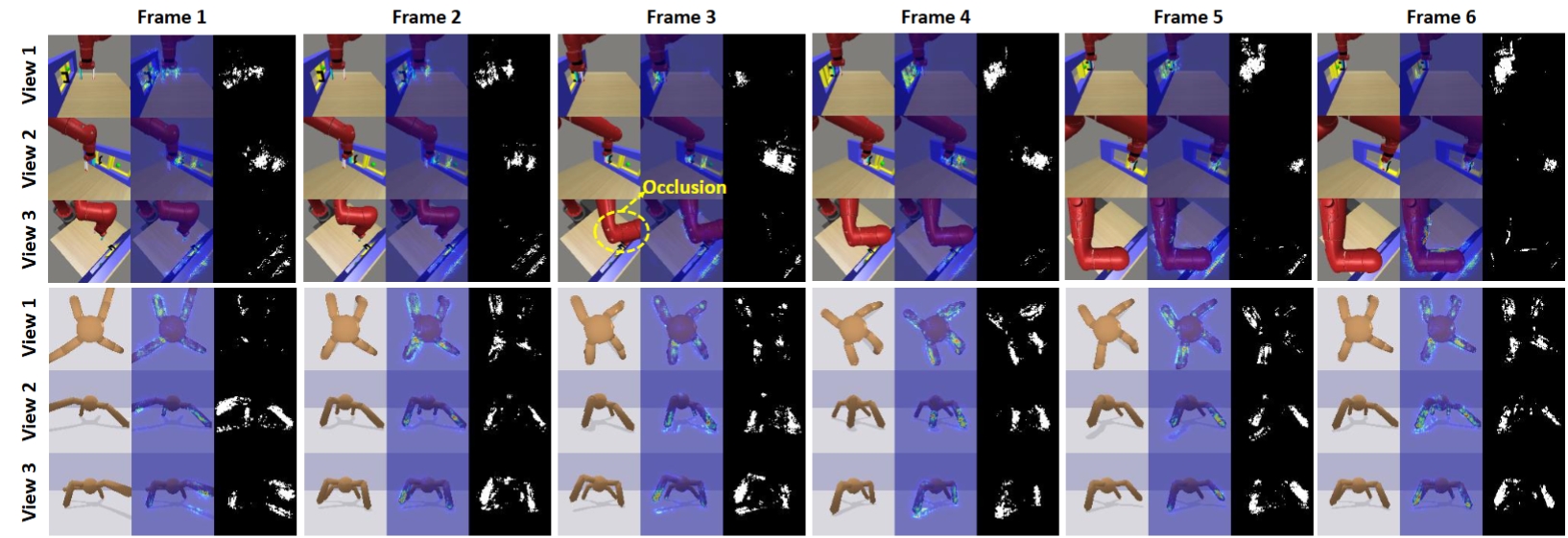

In this study, we propose Multi-view Fusion State for Control (MFSC), a novel Multi-View Reinforcement Learning (MVRL) framework for learning compact, task-relevant representations from multi-view observations, particularly when views are redundant, contain distractors, or are missing. MFSC achieves this in two ways: first, it incorporates bisimulation metric learning into the MVRL pipeline; second, it introduces a multi-view-based mask and latent reconstruction auxiliary task that exploits information shared across views and significantly improves the agent's robustness to missing observations.
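Bisimulation metric learning shapes the encoder so that the distance between two latent states tracks how differently the states behave: their difference in reward plus the discounted distance between their predicted next-state distributions. The sketch below is a minimal NumPy version of the loss popularized by Deep Bisimulation for Control (DBC), assuming a fused latent `z`, scalar rewards, and a latent dynamics model that outputs diagonal Gaussians. MFSC's exact objective, including how views are fused before this loss, is not specified here, so the function names and the permutation-based pairing of samples are illustrative assumptions.

```python
import numpy as np


def gaussian_w2(mu_a, sigma_a, mu_b, sigma_b):
    """Row-wise 2-Wasserstein distance between diagonal Gaussians.

    For N(mu_a, diag(sigma_a^2)) and N(mu_b, diag(sigma_b^2)) the distance has
    the closed form sqrt(||mu_a - mu_b||^2 + ||sigma_a - sigma_b||^2).
    """
    return np.sqrt(np.sum((mu_a - mu_b) ** 2, axis=-1)
                   + np.sum((sigma_a - sigma_b) ** 2, axis=-1))


def bisimulation_loss(z, reward, next_mu, next_sigma, perm, gamma=0.99):
    """DBC-style bisimulation loss on a batch of (assumed) fused latent states.

    z:          (B, D) fused latent states
    reward:     (B,)   rewards
    next_mu:    (B, D) predicted next-latent means from a dynamics model
    next_sigma: (B, D) predicted next-latent standard deviations
    perm:       (B,)   index array pairing sample i with sample perm[i]
    """
    # L1 distance between each latent state and its paired partner.
    z_dist = np.sum(np.abs(z - z[perm]), axis=-1)
    # Bisimulation target: reward gap + discounted transition distance.
    # In an autodiff framework this target would be held fixed (stop-gradient).
    r_dist = np.abs(reward - reward[perm])
    t_dist = gaussian_w2(next_mu, next_sigma, next_mu[perm], next_sigma[perm])
    target = r_dist + gamma * t_dist
    # Penalize latent distances that disagree with the bisimulation target.
    return float(np.mean((z_dist - target) ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    B, D = 32, 8
    z = rng.normal(size=(B, D))
    reward = rng.normal(size=B)
    next_mu = rng.normal(size=(B, D))
    next_sigma = np.exp(rng.normal(size=(B, D)))  # keep std devs positive
    print(bisimulation_loss(z, reward, next_mu, next_sigma, rng.permutation(B)))
```

Pairing each sample with a random permutation of the batch avoids computing all B² pairs; the L1 latent distance and Gaussian W2 term follow DBC's choices and may differ from MFSC's.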

MVRL provides agents with multi-view observations so they can perceive their environments more effectively. The goal is to extract compact, task-relevant latent representations from these views for downstream control. This is challenging because views may carry redundant or distracting information, and some views may be missing altogether.

Our work introduces Multi-view Fusion State for Control (MFSC), which integrates bisimulation metric learning into MVRL to learn representations that better capture task-relevant information. We also propose a multi-view-based mask and latent reconstruction auxiliary task that leverages information shared across views and improves robustness to missing views.
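The mask and latent reconstruction idea can be illustrated with a similarly hedged NumPy sketch. It assumes each view has already been encoded into a per-view embedding, randomly drops whole views, fuses the visible embeddings by averaging, and asks a predictor to recover the fused latent computed from all views under a cosine-distance loss. The mask ratio, mean fusion, hypothetical linear predictor `W`, and cosine loss are illustrative assumptions, not MFSC's published design; in a real training loop the reconstruction target would typically come from a separate target encoder with gradients stopped.

```python
import numpy as np


def sample_view_mask(batch, views, mask_ratio, rng):
    """Boolean (batch, views) mask; True means the view is kept.

    Each view is dropped independently with probability mask_ratio, and
    at least one view per sample is forced to stay visible.
    """
    keep = rng.random((batch, views)) >= mask_ratio
    empty = ~keep.any(axis=1)
    # Re-enable one random view in every row where all views were dropped.
    keep[empty, rng.integers(0, views, size=int(empty.sum()))] = True
    return keep


def masked_fuse(view_emb, keep):
    """Average the embeddings of visible views (assumed fusion rule).

    view_emb: (B, V, D) per-view embeddings; keep: (B, V) boolean mask.
    """
    w = keep[..., None].astype(view_emb.dtype)
    return (view_emb * w).sum(axis=1) / w.sum(axis=1)


def latent_reconstruction_loss(view_emb, keep, W):
    """Cosine distance between the reconstructed and full-view fused latents."""
    pred = masked_fuse(view_emb, keep) @ W  # hypothetical linear predictor
    target = view_emb.mean(axis=1)          # fused latent from all views, held fixed
    cos = np.sum(pred * target, axis=-1) / (
        np.linalg.norm(pred, axis=-1) * np.linalg.norm(target, axis=-1) + 1e-8
    )
    return float(np.mean(1.0 - cos))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    emb = rng.normal(size=(8, 3, 16))       # 8 samples, 3 views, 16-dim embeddings
    keep = sample_view_mask(8, 3, 0.5, rng)
    print(latent_reconstruction_loss(emb, keep, np.eye(16)))
```

Training the encoder and predictor to minimize this loss pushes the fused state from any subset of views toward the all-view state, which is one way shared cross-view information can make the agent tolerant of missing views.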